Recently, DeepSeek has rapidly gained popularity thanks to its exceptional performance, drawing widespread attention from developers and enterprises alike. As a cutting-edge large AI model, DeepSeek builds on recent deep learning techniques, massive-scale training data, and a high-performance computing architecture. It focuses on natural language processing and large-scale reasoning, giving businesses and developers strong AI capabilities.

However, in scenarios such as intelligent robotics, industrial automation, and autonomous devices, where low latency, limited bandwidth, and high security are critical, AI models need to be deployed locally on edge devices. This article walks through deploying and using the DeepSeek-R1 model on our self-developed RTSS-X102 Smart Box, equipped with the Jetson AGX Orin 64GB module.

The RTSS-X102 is an intelligent computing device purpose-built for the NVIDIA Jetson AGX Orin platform. It is widely adopted in scenarios that demand high-performance computing and advanced AI inference. Designed to meet the rigorous requirements of edge computing, artificial intelligence, and embedded applications, the RTSS-X102 delivers powerful edge AI capabilities across a wide range of industries. The key features are listed below:

- Compatible with multiple NVIDIA Jetson Orin modules, delivering strong AI inference and computing power for complex deep learning and computer vision tasks.
- Equipped with 4 USB ports, dedicated Gigabit Ethernet, HDMI, and more, offering flexible peripheral and sensor integration for diverse applications.
- M.2 and Mini PCIe slots for expandable storage, wireless communication modules, or additional function boards, enhancing system flexibility and scalability.
- Industrial-grade, custom-molded enclosure for rapid deployment and long-term availability, designed for stable operation in harsh environments such as robotics, UAVs, and intelligent surveillance.
- Fanless passive cooling with a large heat-dissipation surface, supporting 24/7 continuous operation with minimal maintenance and low operating costs.

The RTSS-X102 featured here is powered by the Jetson AGX Orin 64GB module, which combines a 12-core Arm Cortex-A78AE CPU with a 2048-core NVIDIA Ampere GPU containing 64 Tensor Cores. With up to 275 TOPS of AI performance and 64GB of LPDDR5 memory, it provides a powerful and stable computing foundation for running DeepSeek locally.

Before deployment, flash the system image and BSP package according to the product installation guide. For multi-model deployments, we recommend pre-installing an M.2 SSD so that models and data are stored locally on the drive.
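If you add an SSD, it must be mounted persistently before any models are stored on it. The sketch below builds an `/etc/fstab` entry keyed by the partition UUID, so the mount survives reboots. It assumes the SSD appears as `/dev/nvme0n1p1`, is already formatted as ext4, and is mounted at `/home/nvidia/ssd`, which is the directory used by the Open WebUI command later in this article. The device name and filesystem are assumptions, and the function names are illustrative. Check your own values with `lsblk -f`.

```shell
#!/usr/bin/env bash
# Sketch: persistently mount an M.2 SSD for model storage.
# Assumptions (adjust to your system): device /dev/nvme0n1p1, ext4, mount point /home/nvidia/ssd.
set -euo pipefail

# Build one fstab line from a UUID, mount point and filesystem type.
# "nofail" lets the box keep booting even if the SSD is missing.
fstab_entry() {
  local uuid="$1" mount_point="$2" fs_type="${3:-ext4}"
  printf 'UUID=%s %s %s defaults,nofail 0 2\n' "$uuid" "$mount_point" "$fs_type"
}

# Hypothetical helper: look up the UUID, create the mount point, append to fstab, then mount.
setup_ssd_mount() {
  local device="${1:-/dev/nvme0n1p1}" mount_point="${2:-/home/nvidia/ssd}"
  local uuid
  uuid="$(sudo blkid -s UUID -o value "$device")"
  sudo mkdir -p "$mount_point"
  fstab_entry "$uuid" "$mount_point" | sudo tee -a /etc/fstab >/dev/null
  sudo mount -a
  df -h "$mount_point"
}
```

Run `setup_ssd_mount /dev/nvme0n1p1 /home/nvidia/ssd` only once, because each call appends another line to `/etc/fstab`.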

After the system boots, run the following command to install Ollama, a lightweight runtime for running large language models locally. Once it is installed and properly configured, models such as DeepSeek-R1 or LLaVA can be deployed and run with a few commands.

```shell
# Install Ollama
curl -fsSL https://ollama.com/install.sh | sh
```
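After the installer finishes, it is worth confirming that the Ollama server is actually answering before you pull any models. The minimal sketch below assumes Ollama's default listen address, `127.0.0.1:11434`, and the `ollama` systemd service that the install script sets up. The helper names are illustrative.

```shell
#!/usr/bin/env bash
# Sketch: wait until the local Ollama server answers, then print its version.
# Assumes Ollama's default listen address 127.0.0.1:11434.
set -euo pipefail

# Poll a URL once per second until it responds or the timeout (in seconds) expires.
wait_for_http() {
  local url="$1" timeout="${2:-30}" i
  for ((i = 0; i < timeout; i++)); do
    if curl -fsS --max-time 2 "$url" >/dev/null 2>&1; then
      return 0
    fi
    sleep 1
  done
  return 1
}

# Hypothetical helper: check the systemd unit, then the HTTP endpoint.
check_ollama() {
  systemctl is-active --quiet ollama || echo "warning: ollama.service is not active" >&2
  if wait_for_http "http://127.0.0.1:11434/" 30; then
    ollama --version
  else
    echo "Ollama API did not respond on port 11434" >&2
    return 1
  fi
}
```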

Once installation is complete, run the following commands to download the DeepSeek-R1 1.5B, 7B, 8B, 14B, and 32B models. Note: if you want to store the models on an external SSD, mount the SSD partition in advance and set the model storage path with the `OLLAMA_MODELS` environment variable in the `ollama.service` configuration.
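One way to apply the note above is sketched below, to be run before the pull commands. It writes a systemd drop-in for `ollama.service` that sets `OLLAMA_MODELS`. The path `/home/nvidia/ssd/ollama_models` is an assumption, chosen to match the bind mount in the Open WebUI command later on. The sketch also assumes the standard install, where the service runs as the `ollama` user. The function names are illustrative.

```shell
#!/usr/bin/env bash
# Sketch: point ollama.service at a model directory on the SSD via OLLAMA_MODELS.
# Assumed path; it matches the Open WebUI bind mount used later in this article.
set -euo pipefail

MODELS_DIR="${MODELS_DIR:-/home/nvidia/ssd/ollama_models}"

# Print a systemd drop-in that sets OLLAMA_MODELS for the service.
ollama_override() {
  printf '[Service]\nEnvironment="OLLAMA_MODELS=%s"\n' "$1"
}

# Hypothetical helper: create the directory, install the drop-in, restart Ollama.
apply_override() {
  local dropin_dir=/etc/systemd/system/ollama.service.d
  sudo mkdir -p "$MODELS_DIR" "$dropin_dir"
  # The service runs as the "ollama" user, which must be able to write here.
  sudo chown -R ollama:ollama "$MODELS_DIR"
  ollama_override "$MODELS_DIR" | sudo tee "$dropin_dir/override.conf" >/dev/null
  sudo systemctl daemon-reload
  sudo systemctl restart ollama
}
```

If the service fails to start afterwards, check that the `ollama` user can traverse every parent directory of the path. Home directories are often not world-accessible.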

```shell
# Download DeepSeek-R1 models one by one
ollama pull deepseek-r1:1.5b
ollama pull deepseek-r1:7b
ollama pull deepseek-r1:8b
ollama pull deepseek-r1:14b
ollama pull deepseek-r1:32b
```
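The same downloads can also be scripted as a loop that stops at the first failed pull and then lists the installed models. This is a minimal sketch. `OLLAMA_BIN` is a hypothetical hook, not an Ollama setting, that lets you dry-run the loop (for example with `OLLAMA_BIN=echo`).

```shell
#!/usr/bin/env bash
# Sketch: pull every DeepSeek-R1 size listed above, then show what is installed.
# OLLAMA_BIN is a hypothetical hook so the loop can be dry-run (e.g. OLLAMA_BIN=echo).
set -euo pipefail

OLLAMA_BIN="${OLLAMA_BIN:-ollama}"
DEEPSEEK_TAGS=(1.5b 7b 8b 14b 32b)

pull_deepseek_models() {
  local tag
  for tag in "${DEEPSEEK_TAGS[@]}"; do
    echo "==> deepseek-r1:${tag}"
    # Stop at the first failed download instead of continuing with the rest.
    "$OLLAMA_BIN" pull "deepseek-r1:${tag}" || return 1
  done
  "$OLLAMA_BIN" list
}
```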

Once the model is successfully deployed, you can start using it directly from the terminal.

```shell
# Run deepseek-r1:8b; add --verbose to print token statistics after each response
ollama run deepseek-r1:8b
```
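For scripted use, `ollama run` can also take the prompt as an argument. In that case it answers once and exits instead of opening the interactive prompt. The sketch below wraps this and also calls `ollama ps`, whose output shows whether a loaded model is running on the GPU. The function names and the `OLLAMA_BIN` dry-run hook are illustrative assumptions.

```shell
#!/usr/bin/env bash
# Sketch: send one prompt non-interactively, then list loaded models.
# OLLAMA_BIN is a hypothetical hook so the calls can be dry-run (e.g. OLLAMA_BIN=echo).
set -euo pipefail

OLLAMA_BIN="${OLLAMA_BIN:-ollama}"

ask_once() {
  local model="$1" prompt="$2"
  # Passing the prompt as an argument makes `ollama run` answer once and exit.
  "$OLLAMA_BIN" run "$model" --verbose "$prompt"
}

show_loaded_models() {
  # `ollama ps` lists loaded models; its PROCESSOR column shows the CPU/GPU split.
  "$OLLAMA_BIN" ps
}
```

For example, `ask_once deepseek-r1:8b "Summarize the benefits of edge AI."` followed by `show_loaded_models`.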

Ollama provides a terminal-based interface suited to technical users, but it may not be intuitive for general users. Open WebUI fills this gap: it is an open-source, ChatGPT-style web interface for large models. Users simply open a URL in their browser, select the model they want, and chat with it through text.

Running Open WebUI requires a Docker environment, which can be installed with a single command.

```shell
# Install Docker
curl -fsSL https://get.docker.com | bash
```
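The `docker run` command that follows is shown without `sudo`. One common way to allow that is to add your user to the `docker` group, as in the sketch below. The group change only takes effect after logging out and back in (or running `newgrp docker`), so the smoke test still uses `sudo`. The function names are illustrative.

```shell
#!/usr/bin/env bash
# Sketch: let the current user run docker without sudo, then smoke-test the engine.
set -euo pipefail

# Succeeds if the given user is a member of the "docker" group.
in_docker_group() {
  local user="$1"
  id -nG "$user" 2>/dev/null | tr ' ' '\n' | grep -qx docker
}

setup_docker_user() {
  local me
  me="$(id -un)"
  if ! in_docker_group "$me"; then
    sudo usermod -aG docker "$me"
    echo "Added $me to the docker group; log out and back in (or run 'newgrp docker')."
  fi
  sudo docker run --rm hello-world
}
```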

After Docker is installed, run the following command to pull and run the Open WebUI image. Note: the `-v` flag binds local directories so that the container and the host machine can share files or folders. Configure it according to your specific needs.

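```shell
# --network=host lets the container reach Ollama at 127.0.0.1:11434 and serve the UI
# directly on the host's port 8080 (Docker ignores -p/--publish in host network mode).
docker run -d --network=host \
  --name open-webui \
  -v open-webui:/app/backend/data \
  -v /home/nvidia/ssd/ollama_models:/app/model \
  -e ENABLE_OLLAMA_API=True \
  -e OLLAMA_BASE_URL=http://127.0.0.1:11434 \
  --restart always \
  ghcr.io/open-webui/open-webui:main
```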

Once the container is running, open `http://<target IP address>:8080` in a browser on the same local network to try out the DeepSeek model's inference capabilities.
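To find the address to type in, and to confirm that the container is up, something like the sketch below can be run on the box. It assumes the container name `open-webui` and port 8080 from the `docker run` command. It also assumes the first address reported by `hostname -I` is the one reachable from your LAN, which may not hold on boxes with several interfaces.

```shell
#!/usr/bin/env bash
# Sketch: confirm Open WebUI is running and print the URL to open from another machine.
# Assumes container name "open-webui" and port 8080; the first `hostname -I` address is used.
set -euo pipefail

# Build the browser URL from an IP; without an argument, use the host's first address.
webui_url() {
  local ip="${1:-}"
  if [ -z "$ip" ]; then
    ip="$(hostname -I | awk '{print $1}')"
  fi
  printf 'http://%s:8080\n' "$ip"
}

check_webui() {
  docker ps --filter name=open-webui --format '{{.Names}}: {{.Status}}'
  curl -fsS -o /dev/null --max-time 5 http://127.0.0.1:8080 && echo "Open WebUI is answering"
  echo "Open $(webui_url) in a browser on the same network."
}
```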

The table below shows the token processing rates measured during inference on the RTSS-X102 Smart Box for DeepSeek-R1 models of various sizes. The results show strong overall performance.

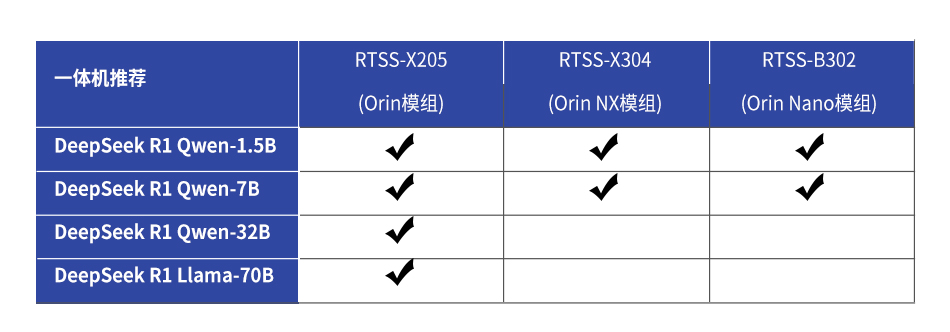
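To reproduce this kind of measurement on your own unit, you can read the generation statistics that Ollama's REST API returns. With `"stream": false`, a `/api/generate` response includes `eval_count` (generated tokens) and `eval_duration` (in nanoseconds, excluding model load time). Dividing the two gives tokens per second. The sketch below assumes Ollama on `127.0.0.1:11434` and that `jq` is installed. The prompt and function names are placeholders, and the figures it prints are not the ones in the table.

```shell
#!/usr/bin/env bash
# Sketch: measure generation speed via Ollama's /api/generate response fields.
# Assumes Ollama on 127.0.0.1:11434 and jq installed (e.g. sudo apt install jq).
set -euo pipefail

# tokens / (nanoseconds / 1e9) = tokens per second, printed with two decimals.
tokens_per_second() {
  awk -v n="$1" -v d="$2" 'BEGIN { printf "%.2f\n", n / (d / 1e9) }'
}

measure_tps() {
  local model="$1" prompt="${2:-Explain what edge computing is in three sentences.}"
  local body reply count duration
  body="$(jq -n --arg m "$model" --arg p "$prompt" '{model: $m, prompt: $p, stream: false}')"
  reply="$(curl -fsS http://127.0.0.1:11434/api/generate -d "$body")" \
    || { echo "request to Ollama failed" >&2; return 1; }
  count="$(jq -r '.eval_count' <<<"$reply")"
  duration="$(jq -r '.eval_duration' <<<"$reply")"
  echo "$model: $count tokens, $(tokens_per_second "$count" "$duration") tokens/s"
}
```

For example: `for m in deepseek-r1:1.5b deepseek-r1:8b deepseek-r1:32b; do measure_tps "$m"; done`.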

Below are the product models our company recommends for deploying the DeepSeek model at the edge. If you have any related inquiries, please don't hesitate to contact us. We are committed to providing comprehensive support and service.

Realtimes Beijing Technology Co. Ltd. specializes in delivering highly customized carrier board and smart box solutions, tailoring every aspect of the hardware and software systems to meet the specific needs of our clients. We also provide comprehensive technical support to ensure the seamless and precise execution of projects.

Contact: James

Service Hotline: 400-100-8358

Email: info@realtimes.cn

Address: 11th Floor, Block B, 20th Heping Xiyuan, Heping West Street, Chaoyang District, Beijing 100013, P.R. China